2024/12/27

On Tuesday, September 10, 2024, the final presentation session for the 10 developers selected under GENIAC’s support program for competitive foundation model development was held at Kudan Kaikan in Tokyo. Part 1 of the event featured presentations by developers on their self-developed models, as well as summary comments from hyperscalers who provided computational resources. Part 2 included an awards ceremony recognizing contributions to technology, community, and presentation quality. This article provides an overview of the highlights from the session.

Part 1: Diverse Results from Developers and Hyperscaler Keynotes

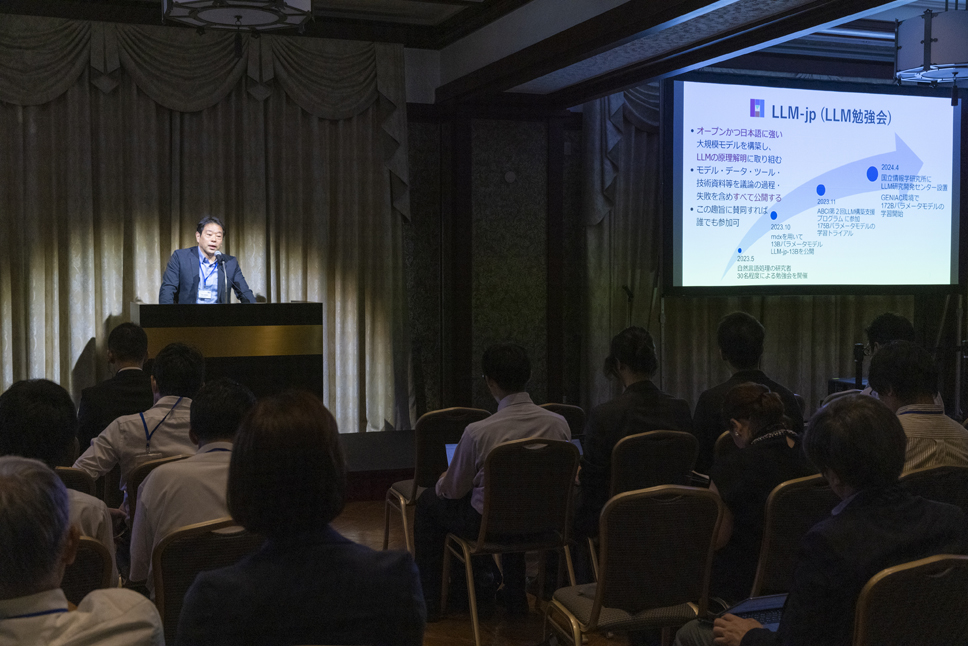

The event began with an opening address by Takuya Watanabe, Director of the Information Processing Industry Office, Ministry of Economy, Trade and Industry (METI). Drawing on key points from the AI Strategy Council’s interim discussions, he emphasized the importance of generative AI in creating global “wealth” through improvement cycles and reflected on the program’s achievements over the past six months.

Next, the developers selected for the program presented the outcomes they achieved.

“In this program, we developed a system that combines foundation models for building low-cost custom models with high-precision Retrieval-Augmented Generation (RAG), overcoming the trade-off between quality and cost in large language model (LLM) development.”

“When constructing a GPT-3-level large language model proficient in Japanese, we decided to make all outcomes, including failures and improvement methods, openly accessible. Our 172B (172-billion-parameter) model will continue pre-training even after the GCP resource usage period ends.”

“We achieved performance surpassing GPT-4 through our own fine-tuning methods and Depth Up-Scaling of foundation models such as Llama. We are also strengthening specialized training for applications in Japanese administrative and regulatory fields.”

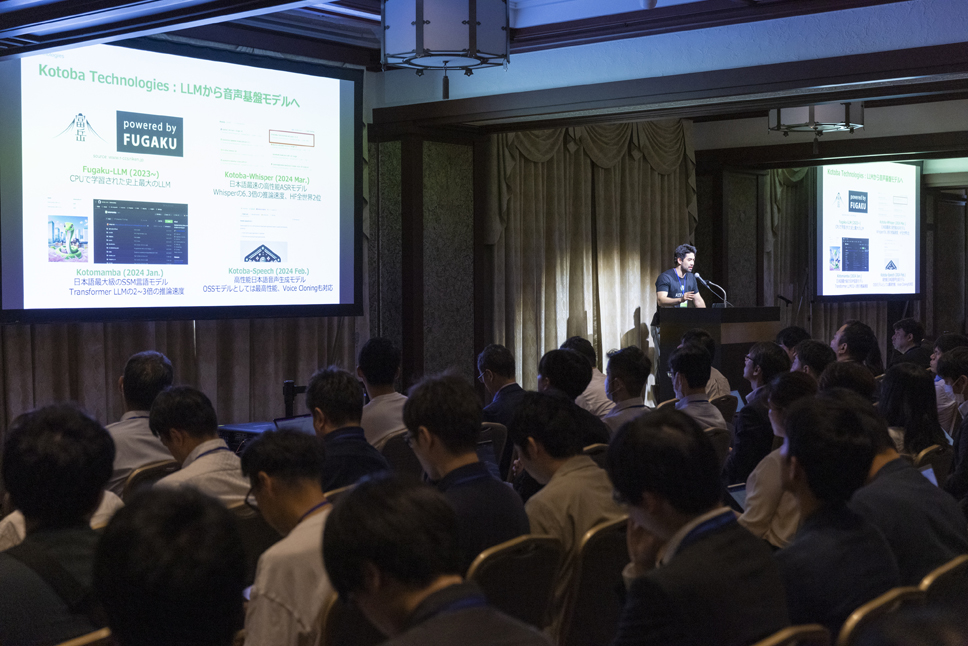

“We developed versatile 1.3B- and 7B-parameter speech foundation models capable of fluent Japanese speech generation, voice cloning, simultaneous interpretation, and offline use. We also plan to release commercial APIs and chatbot platforms soon.”

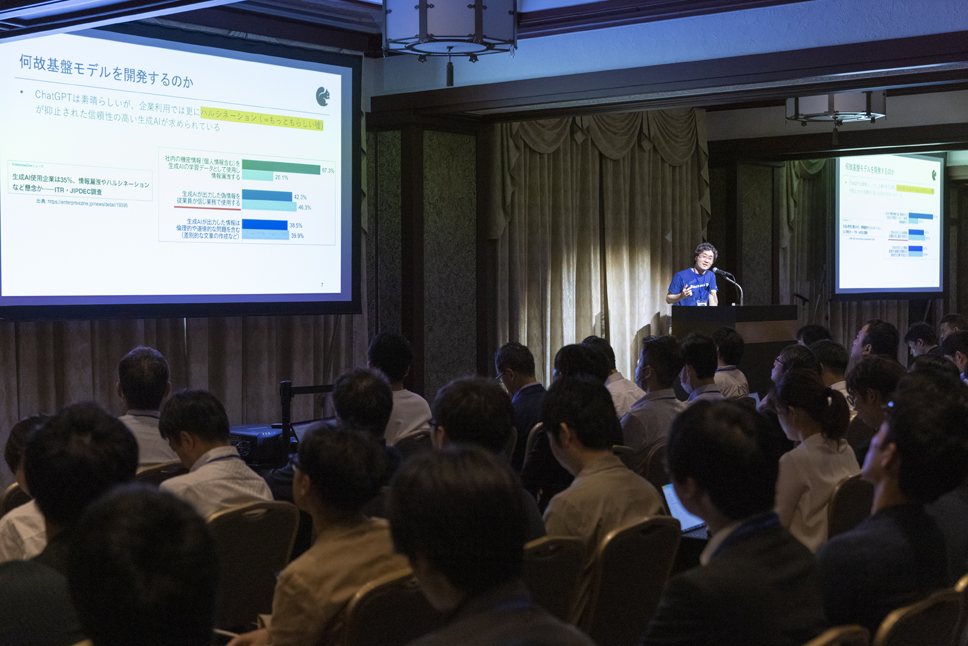

“We developed Stockmark LLM-100b entirely from scratch. Hallucination is one of the biggest issues in adapting generative AI for business, but our LLM significantly suppresses hallucinations. This LLM can also learn confidential corporate information for versatile business applications.”

“We developed a compact yet high-performance foundation model for agent systems. Our research findings, including distillation, sparsity, and evolutionary model merging, are being prepared for publication. Additionally, we are advancing the autonomous agent ‘AI Scientist.’”

“We are developing a multi-modal foundation model for fully autonomous driving. In this program, we created 'HERON,' a 73B-parameter vision-language model that delivers top-level performance in Japan. The model and datasets have been made publicly available.”

"We developed two specialized LLMs in this project: one for generating knowledge graphs from natural language texts and another for performing logical reasoning to answer questions using knowledge graphs. These models achieved world-class benchmark performance in Japanese knowledge processing. Going forward, we plan to further develop Knowledge Graph-Enhanced RAG and 'Takane', a Japanese-language large language model (LLM) designed for secure enterprise use."

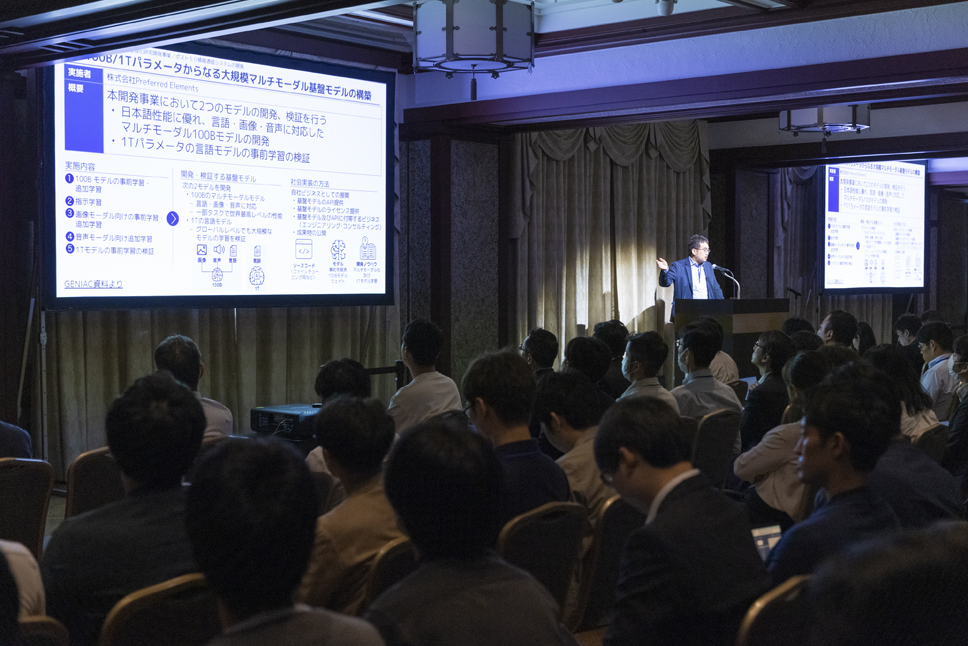

“We established a technological foundation for developing competitive large-scale multi-modal foundation models with 100B/1T parameters in Japan. We also released ‘PLaMo Lite,’ our small language model (SLM) for edge devices, with ‘PLaMo Prime’ scheduled for release soon.”

"We developed an open foundation model aimed at enhancing diverse Japanese language capabilities. Over a development period of approximately four months, we formed a team of 229 members and a development community of over 3,000 participants," said Kawasaki.

"As a result of developing the 8×8B model, we achieved top domestic performance in dialogue and composition capabilities," said Hatakeyama.

Expectations from Hyperscalers and Reflections on the First Cycle

After the presentations by the selected developers, hyperscalers who provided computational resources shared their summary comments.

"The Transformer, which forms the basis of foundation models, has shown dramatic accuracy improvements with increased parameter sizes. However, I believe the next era will require designing models with a balanced approach, considering parameter sizes, dataset size, dataset quality, and computational costs. This is where the individuality of each foundation model developer will shine. For instance, I was deeply impressed by the Tanuki-8B model developed by the Matsuo-Iwasawa Laboratory at the University of Tokyo.

Moving forward, I think there will be increasing demand for specialized foundation models, even in fields requiring advanced expertise.

From the presentations today, I felt that Sakana AI's emphasis on the importance of 'knowledge' and the Matsuo-Iwasawa Laboratory's focus on 'building a developer community' epitomize the achievements of GENIAC. Broadening our perspective on the relationship between Japan and foundation models, I believe that future challenges will involve developing foundation models tailored to the unique characteristics of the Japanese language and diverse industries. The knowledge cultivated within the GENIAC community will enable developers to take the lead in driving innovation."

"Generative AI has been a significant trend over the past two years, but I believe it is more than a passing fad—it is a transformative technology that will drive societal change. In particular, foundation model development hinges on effective data utilization and the ability to generate greater value.

Globally, the trend in this field includes not only text-based applications but also handling multimodal inputs such as voice and images. Furthermore, we are seeing increased diversity in AI models, referred to as 'multi-modelization.' In today’s presentations, I felt that specialized LLMs like Turing’s fully autonomous driving model exemplify this trend.

We are moving toward a world where no single general-purpose AI dominates but rather models are used in the right place for the right purpose. In addition, the shift from human-to-AI interactions to collaborative tasks performed by multiple AIs, known as 'multi-agent systems,' will accelerate the resolution of societal challenges through hyper-automation.

Looking back at GENIAC's first cycle, I see that many developers shifted their focus from general-purpose models to specialized models. Kotoba Technologies' speech foundation model and Stockmark's approach of focusing on internal business challenges are great examples. Each is driven by a strong mission to solve societal issues, and I believe these efforts will continue to expand. Microsoft will remain committed to supporting innovation through computational resources, development platforms, and data platforms."

"I understand that GENIAC is built on three pillars: computational resources, data, and knowledge sharing. These resources are particularly significant for generative AI research, which demands large-scale computational power, and I believe it is highly meaningful that these are being provided to Japanese companies.

At NVIDIA, we have a culture of globally sharing important weekly reports, and our CEO, Jensen Huang, often provides direct feedback. This openness in communication enables us to capture global trends from a broader perspective.

Research and development of large language models is also evolving rapidly. Training and inference for generative AI are akin to high-performance computing (HPC) tasks, requiring substantial infrastructure and specialized knowledge of large-scale distributed training. For some companies, keeping pace with these demands can be challenging.

Amid these circumstances, GENIAC has facilitated international recognition of Japan's technological contributions, and I believe this initiative has significantly strengthened the foundational research capabilities of the country."

At the end of Part 1, Mr. Watanabe from METI explained the achievements and future challenges of GENIAC. He highlighted that the program had successfully strengthened Japan’s foundational capacity for generative AI research and development and initiated a virtuous cycle promoting development and utilization. Looking ahead, he noted that the next challenges include transitioning from seed-based research to improving model performance tailored to specific needs and expanding data utilization.

Concluding Part 1, Mr. Takada from NEDO thanked the hyperscalers, who planned and coordinated computational resources and resolved issues during the program despite the limited six-month timeline, as well as the external experts who took part in the evaluations.

Five GENIAC Awards Presented to Participating Organizations and Individuals

Awards Ceremony in Part 2

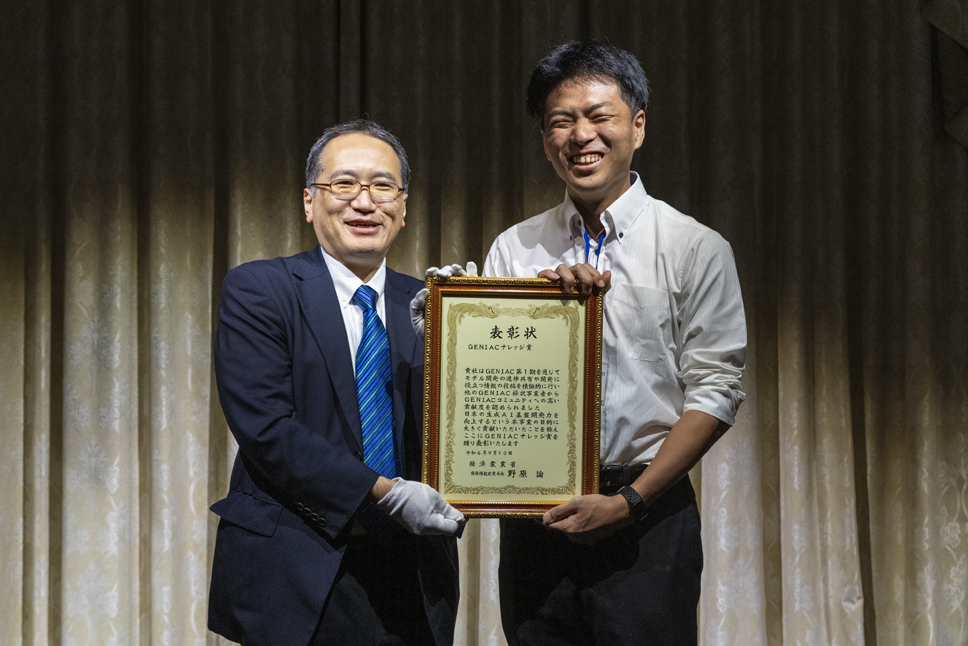

Part 2 began with a toast by Satoshi Nohara, Director-General of the Commerce and Information Policy Bureau, Ministry of Economy, Trade and Industry (METI). Director-General Nohara then presented the awards, which honored contributions to the program’s outcomes. The five award recipients were selected through peer review by the participating developers, and each received a certificate.

New Model Award: Sakana AI Inc.

Sakana AI received the New Model Award for developing a highly innovative model that earned high praise from other developers.

"Even when giving our best efforts, things don’t always go as planned. That’s why I’m truly pleased that we were able to achieve three successful outcomes." (Akiba)

Technical Model Award: Preferred Elements, Inc.

Preferred Elements was awarded the Technical Model Award for tackling technically challenging developments that were highly regarded by other developers.

"I am grateful for the recognition of the team’s hard work in achieving this outcome. We look forward to delivering these results to society as soon as possible." (Okanohara)

Community Award: Yusuke Oda, National Institute of Informatics (NII)

Yusuke Oda received the Community Award for actively participating in GENIAC community events and contributing to its growth and development.

"It is an immense honor to receive this award. I will continue to dedicate myself to contributing to the community." (Message read on behalf of Oda, who was absent)

Knowledge Award: Preferred Elements, Inc.

Preferred Elements received the Knowledge Award for their significant contributions to knowledge sharing, particularly regarding the progress and insights in model development.

"LLM development spans a wide range of research areas, and we believe information sharing is vital for progress." (Suzuki)

GENIAC Presentation Award: KK Kotoba Technologies Japan

Kotoba Technologies Japan won the GENIAC Presentation Award for delivering a clear and innovative presentation that resonated strongly with the audience during the results session.

"We are grateful that, even as a small developer, we were able to demonstrate the potential of speech foundation models and gain such strong support." (Kasai)

The GENIAC Cycle 1 Final Presentation Session concluded without issue, featuring developer presentations, summary comments from hyperscalers, an awards ceremony, and other events. Preparations for the launch of Cycle 2 are now underway. Please stay tuned for further updates on GENIAC's activities.

Last updated: 2024-02-01